Intro: why bother?

Over the past few years, it turns out, three of the books that most influenced my intellectual journey were written by anthropologists. This comes as something of a surprise, as I find myself in the final stages of a highly quantitative, data- and network science heavy Ph.D. programme. The better I become at constructing mathematical models and building quantitatively testable hypotheses around them, the more I find myself fascinated by the (usually un-quantitative) way of thinking great anthro research deploys.

This raises two questions. The first one is: why? What is calling to me from in there? The second one is: can I use it? Could one, at least in principle, see the human world simultaneously as a network scientist and as an anthropologist? Can I do it in practice?

The two questions are related at a deep level. The second one is hard, because the two disciplines simplify human issues in very different ways: they each filter out and zoom in on different things. Also, what counts as truth is different. Philosophers would say that network science and anthropology have different ontologies and different epistemologies. In other words, on paper, a bad match. The answer to the first one, of course, is that this same difference makes for some kind of added value. Good anthro people see on a wavelength that I, as a network scientist, am blind to. And I long for it… but I do not want to lose my own discipline’s wavelength.

Before I attempt to answer these questions, I need to take a step back, and explain why I chose network science as my main tool to look at social and economic phenomena in the first place. I’m supposed to be an economist. Mainstream economists do not, in general, use networks much. They imagine that economic agents (consumers, firms, labourers, employers…) are faced with something called objective functions. For example, if you are a consumer, your objective is pleasure (“utility”). The arguments of this function are things that give you pleasure, like holidays, concert tickets and strawberries. Your job is, given how much money you have, to figure out exactly which combination of concert tickets and strawberries will yield the most pleasure. The operative word is “most”: formally, you are maximising your pleasure function, subject to your budget constraint. The mathematical tool for maximising functions is calculus: and calculus is what most economists do best and trust the most.
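To make the consumer's problem concrete, here is a minimal sketch in Python. Everything specific in it is my own assumption for illustration: the square-root (Cobb-Douglas style) pleasure function, the prices, the budget, and the function names `utility` and `best_bundle`. It also searches over whole units by brute force rather than using calculus, so it shows the shape of the problem, not the economist's actual toolkit.

```python
from itertools import product


def utility(tickets: int, strawberries: int) -> float:
    """Hypothetical pleasure function (an assumption, not from any data)."""
    return (tickets ** 0.5) * (strawberries ** 0.5)


def best_bundle(budget: int, price_ticket: int, price_strawberries: int) -> tuple[int, int]:
    """Return the affordable (tickets, strawberries) pair with the highest utility."""
    max_tickets = budget // price_ticket
    max_strawberries = budget // price_strawberries
    # Budget constraint: keep only the bundles the consumer can pay for.
    affordable = [
        (t, s)
        for t, s in product(range(max_tickets + 1), range(max_strawberries + 1))
        if t * price_ticket + s * price_strawberries <= budget
    ]
    # Maximisation: pick the affordable bundle that yields the most pleasure.
    return max(affordable, key=lambda bundle: utility(*bundle))


if __name__ == "__main__":
    # Assumed numbers: 120 units of money, tickets cost 20, strawberry punnets cost 5.
    print(best_bundle(budget=120, price_ticket=20, price_strawberries=5))
```

Notice that nobody else appears anywhere in this code: other people could only enter through the prices or the budget, which are fixed parameters.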

This way of working is mathematically plastic. It allows scholars to build a consistent array of models covering just about any economic phenomenon. But it has a steep price: economic agents are cast as isolated. They do not interact with each other: instead, they explore their own objective functions, looking for maxima. Other economic agents are buried deep inside the picture, in that they influence the function’s parameters (not even its variables). Not good enough. The whole point of economic and social behaviour is that it involves many people that coordinate, fight, trade, seduce each other in an eternal dance. The vision of isolated monads duly maximising functions just won’t cut it. Also, it flies in the face of everything we know about cognition, and of decades of experimental psychology.

The networks revolution

You might ask how it is that economics insists on such a subpar theoretical framework. Colander and Kupers have a great reconstruction of the historical context in which this happened, and how it got locked in with university departments and policy makers. What matters to the present argument is this: I grasped at network science because it promised a radical fix to all this. Networks have their own branch of math: per se, they are no more relevant to the social world than calculus is. But in the 1930s, a Romanian psychiatrist called Jacob Moreno came up with the idea that the shape of relationships between people could be the object of systematic analysis. We now call this analysis social network analysis, or SNA.

Take a moment to consider the radicality and elegance of this intellectual move. Important information about a person is captured by the pattern of her relationships with others, whoever the people in question are. Does this mean, then, that individual differences are unimportant? It seems unlikely that Moreno, a practicing psychiatrist, could ever hold such a bizarre belief. A much more likely interpretation of social networks is that an individual’s pattern of linking to others, in a sense, is her identity. That’s what a person is.

Three considerations:

- The ontological implications of SNA are polar opposites of those of economics. Economists embrace methodological individualism: everything important in identity (individual preferences, for consumer theory; a firm’s technology, in production theory) is given a priori with respect to economic activity. In sociometry, identity is constantly recreated by economic and social interaction.

- The SNA approach does not rule out the presence of irreducible differences across individuals. A few lines above I stated that an individual’s pattern of linking to others, in a sense, is her identity. By “in a sense” I mean this: it is the part of the identity that is observable. This is a game changer: in economics, individual preferences are blackboxed. This introduces the risk of economic analysis becoming tautological. If you observe an economic system that seems to plunge people into misery and anxiety, you can always claim this springs directly from people maximising their own objective functions because, after all, you can’t know what they are. This kind of criticism is often levelled at neoliberal thinkers. But social networks? They are observable. They are data. No fooling around, no handwaving. And even though there remains an unobservable component of identity, modern statistical techniques like fixed effects estimation can make system-level inferences on what is observable (though they were invented after Moreno’s time).

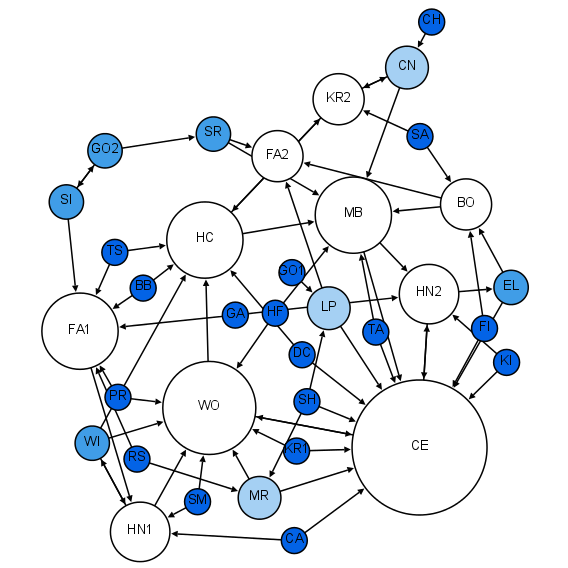

- Moreno’s work is all the more impressive because the mathematical arsenal around networks was then in its infancy. The very first network paper was published by Euler in 1736, but it seems to have been considered a kind of amusing puzzle, and left brewing for over a century. By Moreno’s time there had been significant progress in the study of trees, a particular class of graphs used in chemistry. But basically Moreno relied on visual representations of his social networks, which he called sociograms, to draw systematic conclusions.

By Martin Grandjean (Own work), strictly based on Moreno, 1934 [CC BY-SA 4.0 (http://creativecommons.org/licenses/by-sa/4.0)], via Wikimedia Commons
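The idea that a person's pattern of linking is the observable part of her identity can be sketched in a few lines of Python. This is a toy illustration under my own assumptions: the names and choices are invented, and `link_patterns` is a hypothetical helper, not Moreno's procedure or any library's API. It treats a sociogram as a list of directed choices (who picks whom) and describes each person purely by her ties.

```python
from collections import defaultdict

# Invented data: each pair is (chooser, chosen), as in "Dee picks Ann".
choices = [
    ("Ann", "Bea"),
    ("Bea", "Ann"),
    ("Cal", "Ann"),
    ("Dee", "Ann"),
    ("Dee", "Cal"),
]


def link_patterns(edges):
    """Describe each person only by whom she chooses and who chooses her."""
    chooses = defaultdict(set)
    chosen_by = defaultdict(set)
    for chooser, chosen in edges:
        chooses[chooser].add(chosen)
        chosen_by[chosen].add(chooser)
    people = sorted(set(chooses) | set(chosen_by))
    return {
        person: {
            "chooses": set(chooses.get(person, set())),
            "chosen_by": set(chosen_by.get(person, set())),
        }
        for person in people
    }


if __name__ == "__main__":
    for person, pattern in link_patterns(choices).items():
        print(person, pattern)
```

In this made-up group Ann is chosen by everyone else while nobody chooses Dee: the two differ, and the difference is fully visible in the data, with no appeal to hidden preferences.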

Understanding research methods in anthropology

As novel as networks science felt to me, anthropology is far stranger. From where I stand, it breaks off from scholarship as I was trained to understand it in three places. These are: how it treats individuals; how it treats questions; and what counts as legitimate answers.

Spotlight on individuals

A book written by an anthropologist is alive with actual people. It resonates with their voices, with plenty of quotations; the reader is constantly informed of their whereabouts and even names. Graeber, for example, towards the beginning of Debt introduces a fictitious example of a bartering deal between two men, Henry and Joshua; a hundred pages later he shows us a token of credit issued by an actual 17th-century English shopkeeper, actually called Henry. This historical Henry did his business in a village called Stony Stratford, in Buckinghamshire. The token is there to make the case that the real Henry would do business in a completely different way from the fictional one (credit, not barter). 300 pages later (after sweeping over five millennia of economic, religious and cultural history in two continents) he informs us that Henry’s last name was Coward, that he also engaged in some honourable money lending, and that he was held in high standing by his neighbours. To prove the case, he quotes the writing of one William Stout, a Quaker businessman from Lancashire, who started off his career as Henry’s apprentice.

To an economist, this is theatrical, even bizarre. The author’s point is that it was normal for early modern trade in European villages to take place in credit, rather than cash. Why do we need to know this particular shopkeeper’s name and place of establishment, and the name and birthplace of his apprentice as well? Would the argument not be even stronger if it applied to general trends, to the average shopkeeper, instead of this particular man?

I am not entirely sure what is going on here. But I think it is this: to build his case, the author had to enter into dialogue with real people, and make an effort to see things through their eyes. Ethnographers do this by actually spending time with living members of the groups they wish to study; in the case of works like Debt, the author appears to spend a great deal of time reading letters and diaries, and piecing things together (“Let me tell you how Cortés had gotten to be in that predicament…”). If the reader wishes to fully understand and appreciate the argument, she, too, needs to make that effort. And that means spending time with informants, even in the abridged form of reading the essay, and getting to know them. So, detailed descriptions of individual people are a device for empathy and understanding.

All this makes reading a good anthro book great fun. It also is the opposite of what network scientists do: we build models with identical agents to tease out the effect of the pattern of linking. Anthropologists zoom in on individual agents and make a point of keeping track of their unique trajectories and predicaments.

Asking big questions

Good anthropologists are ambitious, fearless. They zero in on big, hairy, super-relevant questions and lay siege to them. Look at James Scott:

I aim, in what follows, to provide a convincing account of the logic behind the failure of some of the great utopian social engineering schemes of the twentieth century.

That’s a big claim right there. It means debugging the whole of development policies, most urban regeneration projects, agricultural villagization schemes, and the building of utopian “model cities” like Chandigarh or Brasilia. It means explaining why large, benevolent, evidence-based bureaucracies like the United Nations, the International Monetary Fund and the World Bank fail so often and so predictably. Yet Scott, in his magisterial Seeing Like a State, pushes on – and, as far as I am concerned, delivers the goods. David Graeber’s own ambition is in the title: Debt – The first 5,000 years.

Economists don’t do that anymore. You need to be very, very senior (Nobel-grade, or close) to feel like you can tackle a big question. Researchers are encouraged to act as laser beams rather than searchlights, focusing tightly on well-defined problems. It was not always like that: Keynes’s masterpiece is immodestly titled The General Theory of Employment, Interest and Money. But that was then, and this is now.

What counts as “evidence”?

Ethnographic analysis – the main tool in the anthropologist’s arsenal – is not exactly science. Science is about building a testable hypothesis, and then testing it. But testing implies reproducibility of experiments, and that is generally impossible for meso- and macroscale social phenomena, because they have no control group. You cannot re-run the Roman Empire 20 times to see what would have happened if Constantine had not embraced the Christian faith. This kind of research is more like diagnosis in medicine: pathologies exist as mesoscale phenomena and studying them helps. But in the end each patient is different, and doctors want to get it right this time, to heal this patient.

How do you do rigorous analysis when you can’t do science? When I first became intrigued with ethnography, someone pointed me to Michael Agar’s The Professional Stranger. This book started out as a methodological treatise for anthropologists in the field; much later, Agar revisited it and added a long chapter to account for how the discipline had evolved since its original publication. This makes it a sort of meta-methodological guide. Much of Agar’s argument in the additional chapter is dedicated to cautiously suggesting that ethnographers can maintain some kind of a priori categories as they start their work. This, he claims, does not make an ethnographer a “hypothesis-testing researcher”, which would obviously be really bad. When I first read this expression, I did a double take: how could a researcher do anything other than test hypotheses? But no: a “hypothesis-testing researcher” is, to ethnographers, some kind of epistemological fascist. What they think of as good epistemology is to let patterns emerge from immersion in, and identification with, the world in which informants live. They are interested in finding out “what things look like from out here”.

It sounds pretty vague. And yet, good anthropologists get results. They make fantastic applied analysts, able to process diverse sources of evidence from archaeological remains to statistical data, and tie them up into deep, compelling arguments about what we are really looking at when we consider debt, or the metric system, or the particular pattern with which cypress trees are planted in certain areas. A hard-nosed scientist will scoff at many of the pieces (for example, Graeber writes things like “you can’t help feeling that there’s more to this story”. Good luck getting a sentence like that past my thesis supervisor), but those pieces make a very convincing whole. To anthropologists, evidence comes in many flavours.

Coda: where does it all go?

You can see why interdisciplinary research is avoided like the plague by researchers who wish to publish a lot. Different disciplines see the world with very different eyes; combining them requires methodological innovation, with a high risk of displeasing practitioners of both.

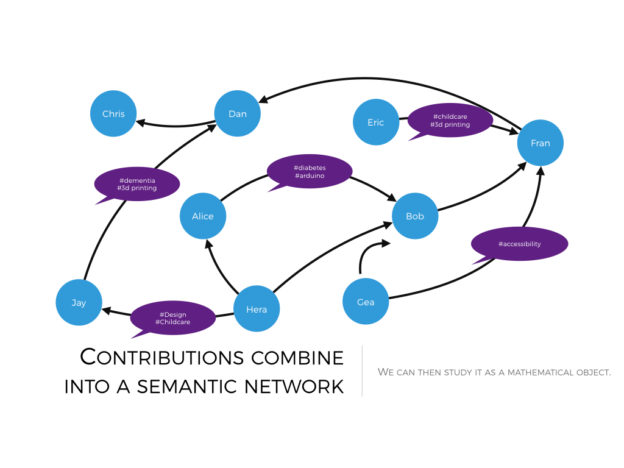

But I have no particular need to publish, and remain fascinated by the potential of combining ethnography with network science for empirical research. I have a specific combination in mind: large scale online conversations, to be harvested with ethnographic analysis. Harvested content is then rendered as a type of graph called a semantic social network, and reduced and analysed via standard quantitative methods from network science. With some brilliant colleagues, we have outlined this vision in a paper (a second one is in the pipeline) so I won’t repeat it here.
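For readers who want a concrete picture of the pipeline, here is a toy sketch in Python. It is not the method of the paper: I am assuming, purely for illustration, that each post has been tagged by an ethnographer with a set of codes, that two codes are linked whenever they appear on the same post, and that "reduction" means dropping weak links. The posts, the codes, and the helpers `cooccurrence_edges` and `reduce_network` are all hypothetical.

```python
from collections import Counter
from itertools import combinations

# Invented posts, each tagged with ethnographic codes (an assumed data shape).
posts = [
    {"author": "ann", "codes": {"debt", "trust"}},
    {"author": "bea", "codes": {"debt", "trust", "family"}},
    {"author": "cal", "codes": {"debt", "family"}},
    {"author": "ann", "codes": {"trust"}},
]


def cooccurrence_edges(tagged_posts):
    """Count, for each pair of codes, how many posts carry both."""
    counts = Counter()
    for post in tagged_posts:
        # Sorting makes ("debt", "trust") and ("trust", "debt") the same edge.
        for pair in combinations(sorted(post["codes"]), 2):
            counts[pair] += 1
    return counts


def reduce_network(edges, min_weight):
    """Keep only the edges supported by at least min_weight posts."""
    return {pair: weight for pair, weight in edges.items() if weight >= min_weight}


if __name__ == "__main__":
    edges = cooccurrence_edges(posts)
    print(reduce_network(edges, min_weight=2))
```

The coding step stays qualitative and human; only what comes after it is counted and filtered.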

I want, instead, to remark how this type of work is, to me, incredibly exciting. I see a potential to combine ethnography’s empathy and human centricity, anthropology’s fearlessness and network science’s exactness, scalability and emphasis on the mesoscale social system. The idea of “linking as identity” is a good example of methodological innovation: it reconciles the idea of identity as all-important with that of interdependence within the social context, and it enables simple(r) quantitative analysis. All this implies irreducible methodological tensions, but I think in most cases they can be managed (not solved) by paying attention to the context. The work is hard, but the rewards are substantial. For all the bumps in the road, I am delighted that I can walk this path, and look forward to what lies beyond the next turns.

Photo credit: McTrent on flickr.com